We can’t turn off our tendency to form hypotheses…we can increase our awareness, encourage alternative explanations and ensure any causal interpretations are fully supported by facts.

by Loren Yager, Ph.D., Instructor, United States Government Accountability Office Center for Audit Excellence

The behavioral economics field has developed valuable insights on when (and how) people make systematic errors in decision-making due to cognitive biases and fallacies. These insights can deepen the understanding of various factors affecting decision-making and are particularly important to audit work, which aims to be independent and objective.

In 2002, psychologist Daniel Kahneman earned the Nobel Prize in Economics for his work on human judgment and decision-making, and his book, "Thinking, Fast and Slow," has become required reading across many disciplines in colleges and universities. Economist Richard Thaler won the same prize in 2017 for his work showing that decision-making is predictably irrational in ways that defy traditional economic theory. By simply observing colleagues, both researchers found that even well-informed, well-educated professionals are subject to systematic errors and biases. Behavioral economics traces these errors to the design of the human mind, concluding that we are all hardwired to make them.

While behavioral economics explains decision-making better than traditional economic theory across a wide range of situations, it is not necessarily easy to apply those insights to work environments. One challenge is that the field has identified a large number of cognitive biases and fallacies, and it can be overwhelming to determine which of them are most applicable in professional environments. A second challenge is that there has been little effort to map biases and fallacies to typical auditing tasks and decisions.

This article addresses both challenges and attempts to assist auditors in applying key insights to think more critically by:

- Outlining relevant biases and fallacies that affect decision-making, and

- Suggesting ways these insights can be used during the audit process.

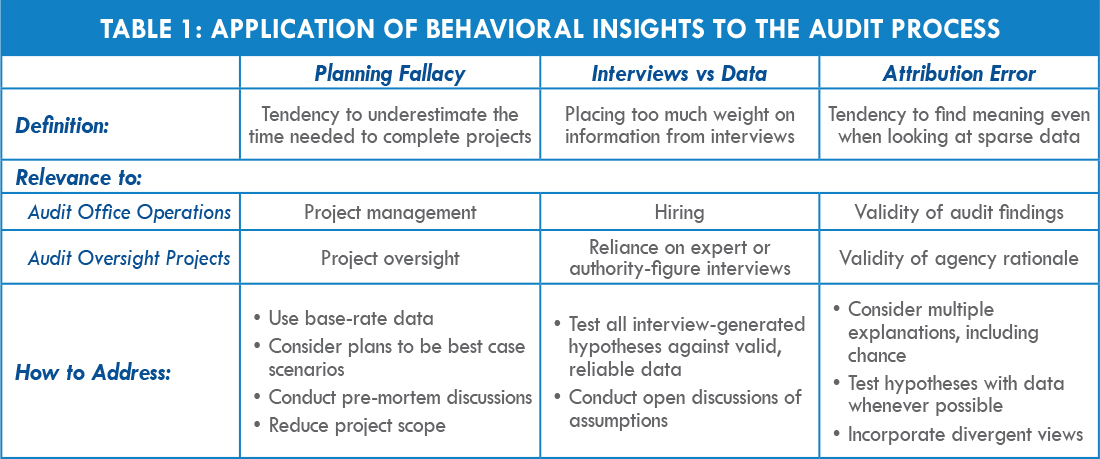

Which cognitive biases might affect auditor planning decisions, influence the use of testimonial evidence, or affect message development and conclusions? How can an auditor minimize these effects? Table 1, “Application of Behavioral Insights to the Audit Process,” provides a few examples and recommendations.

The Planning Fallacy

The planning fallacy, a key behavioral economics insight, describes how teams consistently underestimate the time required to complete a project, which often results in misallocated resources or wasted effort.

Kahneman relates his experience leading a team charged with developing a new academic curriculum. The team ignored its members' prior experience with similar projects (base-rate information) and missed its estimated completion date by years. It fell so far behind schedule, in fact, that the completed curriculum was never used.

In audit work, relevant base-rate information from prior audits is usually accessible, yet auditors still fall victim to the optimism that researchers find so common in planning. The planning fallacy is also relevant to many types of audits, including audits of agency acquisitions, where it tends to produce projects that take longer, deliver less and cost more than originally planned.

Research has identified techniques to address the planning fallacy. One is to collect data on prior projects and use it to estimate schedules and budgets for new projects. Beyond making schedules more realistic, research also shows that short- and intermediate-term milestones are essential for keeping teams focused on the project.
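To make the idea of using prior-project data concrete, here is a minimal Python sketch of one common approach, often called reference class forecasting: compare planned and actual durations on past audits, then scale a new plan by the typical overrun. The `prior_audits` figures, the `adjusted_estimate` function and the choice of the 80th percentile are all hypothetical illustrations, not GAO practice or real data.

```python
from statistics import median, quantiles

# HYPOTHETICAL prior audits as (planned_weeks, actual_weeks). Real figures would
# come from the organization's own project-tracking records.
prior_audits = [(20, 26), (16, 22), (24, 30), (12, 13), (30, 45), (18, 24), (22, 27), (14, 20)]

def adjusted_estimate(planned_weeks, history, percentile=80):
    """Scale a bottom-up plan by the overrun ratios observed on similar past projects.

    Returns (typical, cautious): the plan scaled by the median overrun ratio and
    by the given percentile of overrun ratios.
    """
    ratios = [actual / planned for planned, actual in history]
    typical_ratio = median(ratios)
    # quantiles(..., n=100) returns 99 cut points; index p - 1 is the p-th percentile
    cautious_ratio = quantiles(ratios, n=100)[percentile - 1]
    return planned_weeks * typical_ratio, planned_weeks * cautious_ratio

# A team's own (inside-view) plan for a new audit: 20 weeks
typical, cautious = adjusted_estimate(20, prior_audits)
print(f"Median-adjusted estimate: {typical:.1f} weeks")
print(f"80th-percentile estimate: {cautious:.1f} weeks")
```

Reporting both figures gives planners a likely duration and a cautious one, rather than a single best-case date.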

Other techniques include asking whether project plans reflect best-case (rather than likely) scenarios and holding pre-mortem meetings, where participants are encouraged to imagine that a new project is months behind schedule and to identify potential causes.

Interviews vs. Data

Behavioral economics provides a second key insight for auditors regarding the common tendency to place more weight on interviews and testimonials than on documents and data. While the audit framework already lists testimonial evidence below documentary evidence and data, the research provides additional reasons to be cautious.

One set of biases related to testimonial evidence involves the interviewee. Research shows that memories of events are often faulty, even when the interviewee is trying to be truthful. The unreliability of eyewitness memory is so well documented that the Department of Justice developed stricter guidelines on using lineups and photographs to reduce the risk of wrongful convictions.

A second set of biases involves those held by the interviewers (audit team members). These include authority bias, when the team is interviewing high-level officials, and confirmation bias, when more weight is given to interviews that support the team's initial hypotheses.

In both cases, research cautions against relying heavily on testimonial evidence. This reinforces the importance of collecting as much systematic evidence as possible, particularly to test hypotheses generated in interviews against relevant and reliable data.
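As a minimal illustration of testing an interview-generated claim against data, the Python sketch below compares an official's hypothetical statement ("at least 90% of requests are closed within 30 days") with a hypothetical sample of 40 agency records. It uses the Wilson score interval, one standard way to put a confidence interval around a sample proportion. The claim, the counts and the `wilson_interval` helper are assumptions for illustration only, and the interval is meaningful only if the records were sampled randomly.

```python
from math import sqrt

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% confidence)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half

# HYPOTHETICAL: testimony claims at least 90% on-time closure; a random sample
# of 40 records shows 28 closed within 30 days.
claimed = 0.90
on_time, sampled = 28, 40
low, high = wilson_interval(on_time, sampled)
print(f"Observed {on_time / sampled:.0%} on time, 95% CI {low:.1%} to {high:.1%}")

if high < claimed:
    # Even the top of the interval falls short of the claimed rate
    print("Sample does not support the claimed rate; follow up before relying on it.")
else:
    print("Sample is consistent with the claimed rate.")
```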

Attribution Error

Psychologist Richard Nisbett, who is also active in behavioral economics, believes the fundamental attribution error is the most serious of all biases. In his research, Nisbett demonstrates that in many cases no causal relationship exists at all, only random variation in the data.

“We are superb causal hypothesis generators. Given an effect, we are rarely at a loss for an explanation,” notes Nisbett in his book, “Mindware: Tools for Smart Thinking.”

Biases associated with the fundamental attribution error include seeing patterns in data and events where none exist, neglecting statistical or probability effects, and using schemas or stereotypes when making judgments.

For example, when comparing the percentage of male births at small and large hospitals in China, it would be much easier to find small hospitals with months in which 70% or more of births were male. Just as flipping a coin ten times is much more likely to yield 7 or more tails (17.2%) than flipping it 20 times is to yield 14 or more tails (5.8%), smaller samples produce extreme results by chance far more often. This example illustrates how a person might overlook differences in sample size, infer a causal explanation, and conclude that some kind of intervention is needed.
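The sample-size effect behind these figures can be checked directly. This minimal Python sketch computes exact binomial tail probabilities, reproducing the coin-flip percentages above, and then applies the same calculation to two hypothetical hospitals. The monthly birth counts (20 and 200) and the simplifying assumption that each birth is male with probability 0.5 are illustrative assumptions, not data from the example.

```python
from math import comb

def prob_at_least(n: int, k: int, p: float = 0.5) -> float:
    """Exact binomial tail: probability of k or more 'successes' in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# The coin-flip figures quoted in the text
print(f"7+ tails in 10 flips:  {prob_at_least(10, 7):.1%}")   # 17.2%
print(f"14+ tails in 20 flips: {prob_at_least(20, 14):.1%}")  # 5.8%

# HYPOTHETICAL hospitals: 20 vs. 200 births per month, each birth male with p = 0.5
for births in (20, 200):
    threshold = (7 * births + 9) // 10  # smallest whole count that is at least 70% of births
    print(f"{births} births/month: P(70%+ male) = {prob_at_least(births, threshold):.2e}")
```

Nothing distinguishes the two hospitals except size: the small one simply gives chance far more room to produce an extreme month, with no cause to explain.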

Nisbett also discusses how we use schemas or stereotypes (rule systems and templates) to help us make sense of the world and act appropriately in different situations. However, we are generally unaware of how schemas might affect our decisions. For example, education bond issues tend to receive more votes when the voting location is a school.

Research has also shown that we tend to discard base-rate information (probabilities) once we have conducted an interview, even though base-rate information is often much more predictive than schema-based judgments.
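A worked Bayes' theorem calculation shows why a base rate should not be discarded after an interview. In this minimal Python sketch every number is hypothetical: 10% of comparable entities have a given problem, and an interviewer's impression flags 80% of entities that have it but also 20% of those that do not. The `posterior` helper is likewise illustrative.

```python
def posterior(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """Bayes' theorem: P(problem | positive impression)."""
    true_pos = base_rate * hit_rate                  # has the problem and is flagged
    false_pos = (1 - base_rate) * false_alarm_rate   # no problem but flagged anyway
    return true_pos / (true_pos + false_pos)

# HYPOTHETICAL inputs: 10% base rate, impression catches 80% of problems, 20% false alarms
p = posterior(base_rate=0.10, hit_rate=0.80, false_alarm_rate=0.20)
print(f"P(problem | positive impression) = {p:.1%}")  # 30.8%
```

Even after a positive impression, the probability that the problem exists is only about 31%, well below the 80% the impression alone might suggest, because the low base rate still carries most of the weight.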

We can’t turn off our tendency to form hypotheses, and forming hypotheses can be helpful in early audit process stages. However, we can increase our awareness of this tendency, encourage alternative explanations and ensure any causal interpretations incorporated in reports are fully supported by facts.

Summary

The Nobel Prize for Economics is generally awarded decades after the original contributions are published, once it is clear the ideas have influenced thinking across the discipline. In the case of behavioral economics, the impact has been broader still, as its insights have extended into numerous other fields and professions, such as auditing. Because careful and critical thinking are central to audit work, greater awareness of these concepts would benefit the auditing profession.